On June 23, 2025, the U.S. District Court for the Northern District of California issued a landmark decision in Bartz v. Anthropic PBC, a case that will shape the future of AI training and copyright law.

At the center of the case was the question Judge William Alsup had to answer: Did Anthropic violate copyright law by copying millions of books, some pirated and others legally purchased, to train its AI models?

The ruling marks a pivotal moment in how courts interpret “fair use” in the context of generative AI and sets new precedent for how tech companies, publishers, and brands approach content access, training data, and discoverability.

Whether you’re advising on legal risk, leading marketing strategy, or managing your brand’s presence in AI-generated outputs, this case changes the rules of engagement.

Key Findings from the Court

1. Using Copyrighted Books for AI Training Is Fair Use

The court held that using copyrighted books to train Claude, Anthropic’s large language model, was “exceedingly transformative” and therefore qualified as fair use.

Because Claude’s outputs are not verbatim reproductions, the court did not see the model as directly competing with authors. In its view, training “adds something new” rather than exploiting the original expression.

2. Digitizing Lawfully Purchased Books Is Fair Use

Anthropic legally purchased printed books, scanned them for training, and destroyed the physical copies.

The court found that this method did not constitute redistribution and was protected by fair use. There was “no evidence of any redistribution or commercial substitution,” which favored Anthropic on the fourth fair use factor: the effect of the use on the market for the original works.

3. Using Pirated Books Is Not Fair Use

Anthropic’s use of pirated books was found to be infringing.

Although the company later purchased legal copies of those books, the court did not treat that as a cure. As the judge wrote: “Anthropic’s belated effort to buy these books legally does not absolve it of liability for its initial infringement.”

What the Judge Said: Notable Quotes

Page 12:

“To make anyone pay specifically for the use of a book each time they read it, each time they recall it from memory… would be unthinkable… For centuries, we have read and re-read books. We have admired, memorized, and internalized their sweeping themes, their substantive points, and their stylistic solutions to recurring writing problems.”

Page 13:

“Yes, Claude has outputted grammar, composition, and style that the underlying LLM distilled from thousands of works. But if someone were to read all the modern-day classics because of their exceptional expression, memorize them, and then emulate a blend of their best writing, would that violate the Copyright Act? Of course not.”

Page 28:

“[The] authors’ complaint is no different than it would be if they complained that training schoolchildren to write well would result in an explosion of competing works… The Act seeks to advance original works of authorship, not to protect authors against competition.”

Page 30:

“The technology at issue was among the most transformative many of us will see in our lifetimes.”

What’s Next for Bartz v. Anthropic?

The case will now go to trial to address the claims related specifically to pirated books. The court will determine whether infringement occurred, whether it was willful, and what damages may be owed.

Why This Ruling Matters

This is the first major decision to hold that using lawfully acquired copyrighted material to train AI models can qualify as fair use. That clarification strengthens legal defenses for AI companies and significantly reshapes how brands and publishers think about their data, their terms, and their role in generative discovery.

What This Means for Future AI & Web Scraping Lawsuits

Fair Use Defense Strengthened

This ruling will likely embolden companies like OpenAI, Meta, and Google to defend AI training practices based on “transformative use.” That same reasoning may soon be applied to publicly available web content.

Source Legitimacy Becomes Critical

As this case made clear, how data is acquired matters. Web scrapers that bypass terms of use, robots.txt, or llms.txt directives may lose the protection of fair use entirely.

Terms and robots.txt May Be Treated as Legal Boundaries

Cases like Reddit v. Anthropic may hinge on whether courts treat a site’s robots.txt file, llms.txt file, and terms of use as legally enforceable limits. If so, ignoring them could lead to liability, regardless of output quality.

Training vs. Output Will Be Treated Separately

This ruling covers training only. If a model is later shown to produce verbatim or stylistically similar outputs, separate legal challenges could emerge, as seen in art- and code-related AI cases.

What Brands Should Do Now

1. Reevaluate Your robots.txt and Terms of Use

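Your robots.txt file is no longer just for SEO. It tells AI crawlers that honor it whether they may collect your content, which helps determine whether AI models can “remember” your brand in the new era of generative recall. Compliance is voluntary, so robots.txt works as a request rather than an access control.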

- Allow AI crawlers if you want brand visibility in AI-generated answers (product info, FAQs, positioning).

- Disallow them if you’re protecting premium IP or restricting unauthorized reuse.
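
The allow/disallow choice above comes down to a few lines of robots.txt. The Python sketch below holds a hypothetical policy as a string and checks it with the standard library’s `urllib.robotparser`, so you can confirm a policy behaves as intended before publishing it. It assumes `GPTBot` (OpenAI) and `CCBot` (Common Crawl) as example crawler tokens and made-up paths such as `/premium/`; confirm current user-agent names in each operator’s documentation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt policy: crawler choices and paths are illustrative only.
# Disallow comes before the broad Allow because Python's parser applies the first
# rule whose path matches; RFC 9309 and Google pick the most specific (longest)
# match, so this order works for both.
ROBOTS_TXT = """\
# Let OpenAI's crawler read public brand pages, but not premium content
User-agent: GPTBot
Disallow: /premium/
Allow: /

# Keep Common Crawl's crawler out entirely
User-agent: CCBot
Disallow: /

# Everyone else: default access
User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    checks = [
        ("GPTBot", "https://example.com/products/widget"),
        ("GPTBot", "https://example.com/premium/report"),
        ("CCBot", "https://example.com/products/widget"),
        ("SomeOtherBot", "https://example.com/premium/report"),
    ]
    for agent, url in checks:
        verdict = "allowed" if rp.can_fetch(agent, url) else "blocked"
        print(f"{agent:<13} {url} -> {verdict}")
```

Keep in mind that this only shows how a compliant crawler would read the file; it cannot stop a scraper that ignores robots.txt.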

2. Implement an llms.txt File

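Have you implemented an llms.txt file? It is a proposed convention, not yet a formal standard: a Markdown file at your site’s root that gives LLMs a concise summary of your site and links to its most important content. Where robots.txt tells crawlers what they may fetch, llms.txt points them to what matters most.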
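
Because llms.txt is a community proposal (described at llmstxt.org) rather than a formal standard, whether a given AI system reads it is not guaranteed. As a sketch, assuming the layout that proposal describes (an H1 site name, a blockquote summary, then H2 sections of Markdown link lists), the Python below renders such a file from a small data model. The `Link` and `LlmsTxt` classes, the brand name, and the URLs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    title: str
    url: str
    note: str = ""


@dataclass
class LlmsTxt:
    """Minimal model of the llms.txt layout proposed at llmstxt.org (assumed format)."""
    site_name: str
    summary: str
    sections: dict[str, list[Link]] = field(default_factory=dict)

    def render(self) -> str:
        # H1 with the site name, then a one-line blockquote summary
        lines = [f"# {self.site_name}", "", f"> {self.summary}"]
        # Each H2 section holds a Markdown list of links with optional notes
        for heading, links in self.sections.items():
            lines += ["", f"## {heading}", ""]
            for link in links:
                suffix = f": {link.note}" if link.note else ""
                lines.append(f"- [{link.title}]({link.url}){suffix}")
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical brand and URLs, for illustration only
    doc = LlmsTxt(
        site_name="Example Brand",
        summary="Example Brand makes reusable water bottles for outdoor use.",
        sections={
            "Products": [
                Link("Product catalog", "https://example.com/products.md", "Current lineup and specs"),
            ],
            "Support": [
                Link("FAQ", "https://example.com/faq.md"),
            ],
        },
    )
    print(doc.render())
```

Generating the file from data, rather than editing it by hand, makes it easier to keep in sync with the pages your marketing team actually wants surfaced.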

3. Align Legal and Marketing Strategy

Work cross-functionally to review:

- Terms of use: Do they prohibit automated scraping?

- robots.txt and llms.txt directives: Do they reflect your AI visibility goals?

- APIs and content feeds: Are you offering secure, licensed access to brand content?

4. Optimize for LLM Discovery

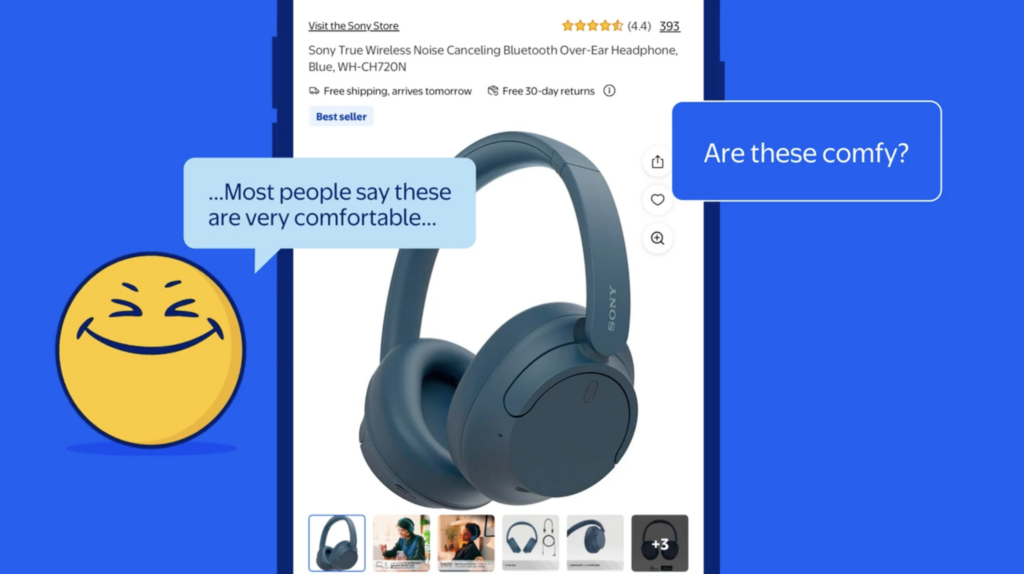

If your content is crawlable for LLMs, optimize it like you would for SEO:

- Use clear, canonical messaging.

- Apply structured data and schema markup.

- Ensure product descriptions and brand claims are AI-readable.
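
Structured data is commonly added as JSON-LD using the schema.org vocabulary. The Python sketch below builds a schema.org `Product` object and wraps it in the `<script type="application/ld+json">` element that pages embed it in. The product details and the helper names `product_jsonld` and `script_tag` are hypothetical; which properties you need depends on your pages, and markup helps machine readers parse your claims but does not guarantee inclusion in AI answers.

```python
import json


def product_jsonld(name: str, description: str, brand: str, url: str) -> str:
    """Build a schema.org Product object as JSON-LD (illustrative subset of properties)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "brand": {"@type": "Brand", "name": brand},
        "url": url,
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    # Escape "</" so text inside the JSON cannot close the script element early;
    # "<\/" is still valid JSON because "\/" is a legal escape for "/".
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


if __name__ == "__main__":
    # Hypothetical product, for illustration only
    snippet = product_jsonld(
        "Trail Bottle",
        "A 750 ml insulated steel bottle.",
        "Example Brand",
        "https://example.com/trail-bottle",
    )
    print(script_tag(snippet))
```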

5. Prepare for Licensing Discussions

Expect more licensing deals between publishers, brands, and LLM providers. Be ready to:

- Audit how your content is being used in AI models.

- Decide when to restrict, monetize, or strategically allow access.

- Shape your brand’s presence in ChatGPT, Claude, Gemini, and other AI platforms.
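
Server access logs cannot show how a model uses your content, but they can show which AI crawlers fetch which pages, a reasonable first input to an audit. The Python sketch below counts requests per crawler and path. It assumes the common Apache/Nginx “combined” log format and an illustrative, incomplete list of crawler tokens (`AI_CRAWLER_TOKENS` is a hypothetical name); user-agent strings can be spoofed, so treat the counts as indicative rather than proof.

```python
import re
from collections import Counter

# Example AI crawler user-agent tokens (assumed, incomplete list;
# check each operator's documentation for current names)
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot")

# Captures the request path and the final quoted field (the user agent)
# from a combined-format access log line.
LOG_LINE = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"')


def count_ai_crawler_hits(lines):
    """Count requests per (crawler token, path) pair; non-matching lines are skipped."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        agent = match.group("agent").lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in agent:
                hits[(token, match.group("path"))] += 1
                break
    return hits
```

For example, pass the lines of your own access log to `count_ai_crawler_hits` and review `hits.most_common()` to see which pages each crawler requests most.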

Want to Shape Your Brand’s AI Visibility?

Avenue Z’s award-winning AI Optimization solution helps brands secure visibility at the top of AI search results: where trust, traffic, and growth begin. We help you stay compliant, visible, and in control. Talk to our experts today to learn how we can align your brand strategy with the next generation of AI discovery.
