Web crawlers, often referred to as bots or spiders, are essential tools for navigating and indexing the vast expanse of the internet. They systematically browse web pages to collect data, enabling search engines and other platforms to organize and retrieve information efficiently. With the advent of artificial intelligence (AI), a new breed of crawlers has emerged, fundamentally transforming how web indexing is conducted.

This evolution is particularly relevant for brands looking to enhance their digital presence through AI Optimization (AIO), a process that leverages machine learning and automation to improve visibility, searchability, and overall content effectiveness. This article examines the differences between traditional and AI crawlers, highlighting how each one works and what that means for web indexing.

Traditional Web Crawlers: Broad and Systematic Indexing

Traditional web crawlers, like Googlebot, are designed to scan and index as much of the internet as possible, ensuring that search engines have a vast database of web pages to retrieve relevant results. They operate by systematically following links from one page to another, retrieving HTML content, and storing structured data that helps search engines rank and display information efficiently. By continuously crawling and updating their index, these bots ensure that users receive the most up-to-date and relevant search results based on their queries.

- Prioritize comprehensive coverage, aiming to index as much of the web as possible to answer general search queries.

- Follow predefined signals, such as robots.txt directives and XML sitemaps, to determine which URLs they may crawl and which to prioritize.

- Struggle with dynamic content, often requiring extra processing to handle JavaScript-heavy pages.
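To make this link-following behavior concrete, here is a minimal Python sketch of a breadth-first crawl that checks robots.txt before visiting each URL. It is an illustration under stated assumptions, not how Googlebot works internally: the robots.txt rules, the `example.com` URLs, and the in-memory `LINK_GRAPH` (standing in for fetched HTML pages) are all hypothetical. Only the standard library's `urllib.robotparser` is used.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site (illustration only).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

# Hypothetical link graph standing in for links extracted from fetched HTML.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/about", "https://example.com/private/admin"],
    "https://example.com/about": ["https://example.com/blog"],
    "https://example.com/blog": ["https://example.com/", "https://example.com/blog/post-1"],
    "https://example.com/blog/post-1": [],
    "https://example.com/private/admin": ["https://example.com/private/keys"],
}


def crawl(start_url, user_agent="ExampleBot", max_pages=100):
    """Breadth-first crawl that skips any URL robots.txt disallows."""
    rules = RobotFileParser()
    rules.parse(ROBOTS_TXT.splitlines())

    frontier = deque([start_url])  # URLs waiting to be visited, oldest first
    seen = {start_url}             # URLs already queued, so none is queued twice
    indexed = []                   # URLs the crawler actually "fetched"

    while frontier and len(indexed) < max_pages:
        url = frontier.popleft()
        if not rules.can_fetch(user_agent, url):
            continue  # honor the Disallow rule; this page's links are never read
        indexed.append(url)
        for link in LINK_GRAPH.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return indexed


print(crawl("https://example.com/"))
```

Run as-is, the crawl visits the home, about, blog, and post pages. It never queues `/private/keys`, because the disallowed `/private/admin` page is skipped before its links are read. A production crawler would add real HTTP fetching, politeness delays, and sitemap handling.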

While effective for general search, traditional crawlers focus more on scalability than on deep content understanding. They catalog web pages based on metadata, keywords, and links, but they don’t fully interpret meaning or intent.
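The sketch below shows what cataloging a page by metadata, keywords, and links can look like, using Python's built-in `html.parser`. The sample HTML and the `PageCataloger` class are hypothetical, and its "keywords" are plain word counts filtered by a small stop-word list: exactly the kind of shallow signal that records what words appear without capturing meaning or intent.

```python
import re
from collections import Counter
from html.parser import HTMLParser

# Small illustrative stop-word list; real systems use far larger ones.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


class PageCataloger(HTMLParser):
    """Records a page's <title>, named <meta> tags, links, and body words."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self.links = []
        self._words = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        else:
            self._words += re.findall(r"[a-z]+", data.lower())

    def top_keywords(self, n=3):
        """Most frequent non-stop-words: keyword counting, not understanding."""
        counts = Counter(w for w in self._words if w not in STOPWORDS)
        return [word for word, _ in counts.most_common(n)]


SAMPLE_HTML = """<html><head>
<title>Trail Running Shoes</title>
<meta name="description" content="Guide to trail running shoes">
</head><body>
<h1>Trail running shoes</h1>
<p>Trail shoes need grip on every trail.</p>
<a href="/reviews">Reviews</a> <a href="/sizing">Sizing</a>
</body></html>"""

page = PageCataloger()
page.feed(SAMPLE_HTML)
page.close()
print(page.title, page.meta, page.links, page.top_keywords(2))
```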

AI Crawlers: Targeted and Context-Aware Indexing

AI crawlers, on the other hand, are more selective in how they scan and collect data, prioritizing quality and relevance over sheer volume. Instead of broadly indexing the web, they focus on extracting specific types of content that align with AI model training, contextual understanding, or specialized search needs. By leveraging machine learning, these crawlers can analyze, interpret, and adapt to evolving web structures, making them far more sophisticated in how they process and prioritize information.

- Use machine learning to analyze content beyond keywords, identifying deeper relationships between topics.

- Adapt dynamically to website structure changes, ensuring more accurate and up-to-date indexing.

- Can process non-traditional content types, including dynamically generated pages, multimedia, and structured datasets.
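One long-standing way to crawl selectively is a focused crawler: it keeps a priority queue of links ordered by estimated relevance and spends a limited fetch budget on the most promising ones. The sketch below assumes a hypothetical in-memory site (`PAGES`) and a hypothetical topic word set; its `relevance` function is simple word overlap, standing in for the machine learning model a real AI crawler might use.

```python
import heapq

# Hypothetical site: URL -> (page text, [(link URL, anchor text), ...]).
PAGES = {
    "/": ("welcome to our store",
          [("/careers", "careers"), ("/shoes", "trail running shoes"), ("/blog", "running blog")]),
    "/shoes": ("trail running shoes with grip", [("/shoes/trail", "trail shoe grip test")]),
    "/shoes/trail": ("trail running grip test results", []),
    "/careers": ("we are hiring engineers", [("/careers/apply", "apply now")]),
    "/careers/apply": ("submit your application", []),
    "/blog": ("running tips for trail races", []),
}

# Hypothetical topic the crawl is collecting data for.
TOPIC = {"trail", "running", "shoes", "grip"}


def relevance(text):
    """Fraction of topic words present in text (stand-in for an ML classifier)."""
    return len(set(text.lower().split()) & TOPIC) / len(TOPIC)


def focused_crawl(start, budget=4, min_score=0.25):
    """Fetch the most promising links first; keep pages scoring >= min_score."""
    frontier = [(-1.0, start)]  # heapq is a min-heap, so priorities are negated
    seen = {start}
    kept = []
    while frontier and budget > 0:
        _, url = heapq.heappop(frontier)
        budget -= 1  # one simulated fetch spent
        text, links = PAGES.get(url, ("", []))
        if relevance(text) >= min_score:
            kept.append(url)
        for link, anchor in links:
            if link not in seen:
                seen.add(link)
                # The target page is not fetched yet, so rank it by its anchor text.
                heapq.heappush(frontier, (-relevance(anchor), link))
    return kept


print(focused_crawl("/"))
```

With a budget of four fetches, the crawl reaches the shoe pages and the running blog post and never visits `/careers`, whose anchor text scores zero. A broad crawler with the same budget would simply take links in discovery order.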

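For instance, OpenAI's GPTBot collects publicly available web content that may be used to train its large language models (LLMs), improving their ability to generate human-like text and understand complex queries. Google operates GoogleOther, a general-purpose crawler used for purposes such as research and development, and offers the Google-Extended robots.txt token so sites can control whether their content is used to improve its generative AI models. Content gathered this way is not merely indexed for search: it is used to improve AI models' performance.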

AI crawlers serve more than search engines: the data they collect helps train AI models, refine language understanding, and improve personalized recommendations. Their ability to interpret meaning and context makes them a powerful tool for the future of search and AI-driven content discovery.
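Because AI crawlers identify themselves with their own user-agent tokens, site owners can give them different robots.txt rules from search crawlers. The policy below is an example, not a recommendation: it allows every crawler except `GPTBot` (OpenAI's crawler) and `Google-Extended` (a token Google provides for opting out of AI model training without affecting Search). The check uses Python's standard `urllib.robotparser`; confirm current token names in each vendor's documentation before relying on them.

```python
from urllib.robotparser import RobotFileParser

# Example policy (an assumption for illustration): opt out of AI-training
# crawlers while leaving all other crawlers, including search, unrestricted.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/blog/post-1"  # hypothetical page
for agent in ("Googlebot", "GPTBot", "Google-Extended"):
    print(agent, rules.can_fetch(agent, url))
```

Keep in mind that robots.txt is a request, not an enforcement mechanism: it only affects crawlers that choose to honor it.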

Key Differences Between AI Crawlers and Traditional Crawlers

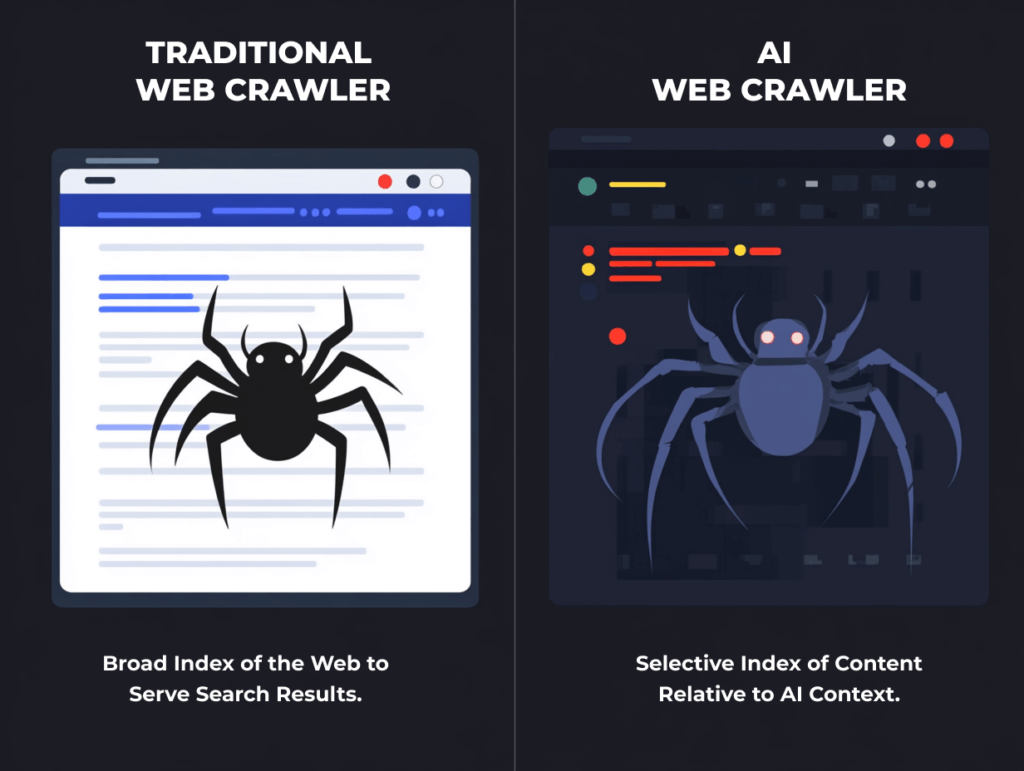

Traditional web crawlers build a broad index of the web to serve search results. AI web crawlers selectively collect content relevant to a specific AI use case.

1. Data Processing and Analysis

- Traditional Crawlers: Focus on keyword matching and basic metadata extraction, which can limit understanding of content nuances.

- AI-Powered Crawlers: Employ advanced natural language processing to grasp context, sentiment, and deeper meanings, leading to more sophisticated indexing.

2. Adaptability

- Traditional Crawlers: Require manual updates to handle new web technologies or structural changes in websites.

- AI-Powered Crawlers: Use machine learning to adjust autonomously to new content formats and site architectures, reducing the need for constant manual adjustments.

3. Content Interaction

- Traditional Crawlers: Primarily retrieve static content, often struggling with dynamic elements like JavaScript-rendered content.

- AI-Powered Crawlers: May execute scripts and render dynamic content, accessing information that a plain HTML fetch would miss, although rendering support varies from crawler to crawler.
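The gap between static and script-rendered content is easy to see by extracting text from raw HTML the way a crawler that does not execute JavaScript would. In this sketch, both pages and the `visible_text` helper are hypothetical: the client-side rendered shell yields no text at all, while the server-side rendered version exposes the same copy directly in the HTML response.

```python
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Collects the text a crawler sees when it does not run JavaScript."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    parser = TextOnly()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Client-side rendered shell: the copy only appears after /app.js runs in a browser.
CSR_PAGE = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'

# Server-side rendered page: the same copy is already in the HTML response.
SSR_PAGE = ('<html><body><div id="root"><h1>Trail Running Shoes</h1>'
            '<p>Grip for wet rock.</p></div></body></html>')

print(repr(visible_text(CSR_PAGE)))
print(repr(visible_text(SSR_PAGE)))
```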

| Traditional Web Crawler | AI Web Crawler |
| --- | --- |
| Broadly indexes web pages by following links and collecting basic data | Collects targeted data for AI training, understanding context and meaning |
| Uses fixed rules, struggling with dynamic or JavaScript-heavy content | Adapts in real-time, handling dynamic content and various formats |
| Focuses on text-based content with limited language understanding | Processes natural language, including context and intent |
| Operates on set schedules, providing raw data for indexing | Operates on-demand or continuously, adjusting to real-time needs |
| Less resource-intensive but lacks adaptability | More resource-intensive but extracts deeper insights |
| Collects data broadly without deep analysis | Performs selective data collection for specific AI projects |
| Limited to basic web scraping and link-following | Uses machine learning for content understanding and relationship mapping |

Implications for Webmasters and Content Creators

The rise of AI crawlers means webmasters and content creators must optimize their sites for both traditional search indexing and AI-driven data collection. Ensuring content is structured, accessible, and contextually rich will improve visibility in AI-powered search and machine learning models. Websites with dynamic or JavaScript-heavy content should implement best practices like structured data and server-side rendering to ensure AI crawlers can accurately process and interpret their information.
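Structured data is commonly added to a page as a JSON-LD block using schema.org vocabulary. Here is a minimal Python sketch that generates one for an article; the `article_json_ld` helper and the field values in the example call are placeholders, and real pages should use whichever schema.org type and properties match their content.

```python
import json


def article_json_ld(headline, author_org, date_published, url):
    """Returns a schema.org Article description wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Organization", "name": author_org},
        "datePublished": date_published,  # ISO 8601 date string
        "url": url,
    }
    # Escape "</" so no value can close the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = json.dumps(data).replace("</", "<\\/")
    return f'<script type="application/ld+json">{body}</script>'


# Placeholder values for illustration only.
print(article_json_ld("Example Headline", "Example Co.", "2025-01-15", "https://example.com/article"))
```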

AI-powered crawlers bring both opportunities and challenges:

- Enhanced Visibility: AI crawlers can lead to better content understanding and improved search result placements, particularly for brands investing in AI Optimization (AIO) to maximize their digital presence.

- Content Control: Webmasters may need to implement strategies, such as updating robots.txt files, to manage how their content is accessed and used, especially concerning AI model training.

- Resource Management: AI crawlers can be more resource-intensive, potentially impacting server load and bandwidth. It’s crucial to monitor crawler activity to mitigate potential strain on web infrastructure.
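Monitoring crawler activity can start with the web server's access logs. The sketch below assumes logs in the common Combined Log Format and counts requests and bytes served per crawler token; the sample log lines, IP addresses, and token list are illustrative.

```python
import re
from collections import Counter

# Crawler tokens to track (illustrative; extend with the bots you care about).
CRAWLER_TOKENS = ("Googlebot", "GoogleOther", "GPTBot")

# Tail of a Combined Log Format line: "request" status bytes "referer" "user-agent"
LOG_TAIL = re.compile(r'"[^"]*" (\d{3}) (\d+|-) "[^"]*" "([^"]*)"$')


def crawler_load(lines):
    """Count requests and response bytes per crawler token."""
    hits, bytes_sent = Counter(), Counter()
    for line in lines:
        match = LOG_TAIL.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        size, agent = match.group(2), match.group(3)
        for token in CRAWLER_TOKENS:
            if token in agent:
                hits[token] += 1
                bytes_sent[token] += 0 if size == "-" else int(size)
                break
    return hits, bytes_sent


# Hypothetical access-log lines (documentation IP ranges).
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Mar/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Mar/2025:10:00:02 +0000] "GET /blog HTTP/1.1" 200 20480 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '203.0.113.9 - - [10/Mar/2025:10:00:03 +0000] "GET /blog/post-1 HTTP/1.1" 200 18432 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/Mar/2025:10:00:04 +0000] "GET /about HTTP/1.1" 304 - "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

hits, bytes_sent = crawler_load(SAMPLE_LOG)
print(hits, bytes_sent)
```

User-agent strings can be spoofed, so treat these counts as a first signal and verify heavy hitters against the crawler operator's published verification method before acting on them.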

Conclusion

AI-powered crawlers are redefining the web indexing process by introducing advanced capabilities that surpass traditional methods. Their ability to understand context, adapt to changes, and process dynamic content offers a more comprehensive and nuanced approach to indexing the web. As this technology continues to evolve, brands must adopt AI Optimization (AIO) strategies to ensure their content remains accessible, discoverable, and accurately represented in AI-driven search environments.

Want to ensure your website is optimized for AI-powered indexing and search? Talk to our experts at Avenue Z to build a strategy that keeps your content ahead of the curve.