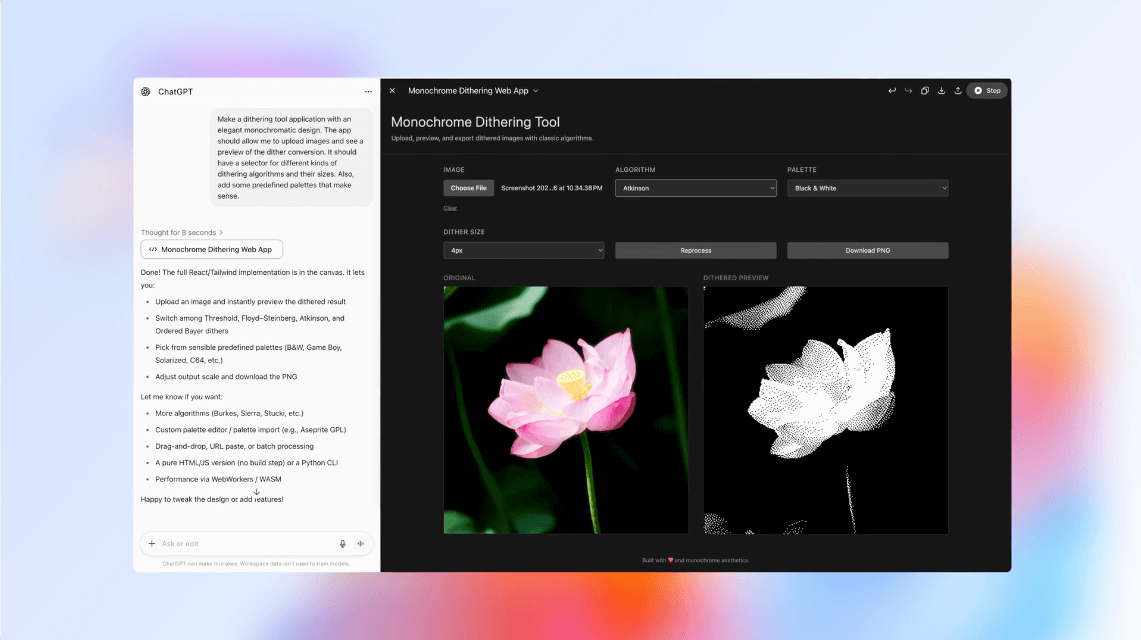

When OpenAI introduced GPT-5, it positioned the model as its “smartest, fastest, most useful” AI yet: a leap forward in reasoning, creativity, and multimodal understanding. Billed as offering PhD-level intelligence, state-of-the-art coding abilities, and significant improvements in factual accuracy, GPT-5 arrived as the new default in ChatGPT, replacing GPT-4o and earlier models.

The launch promised a unified system that could decide when to respond instantly and when to think deeply, backed by advances in reducing hallucinations, improving instruction-following, and enhancing key use cases like writing, coding, and health advice.

But despite the hype, the rollout didn’t go entirely to plan…

The Backlash

Just days after its debut, GPT-5 faced a wave of user criticism. As Wired reported, Reddit threads with titles like “Kill 4o isn’t innovation, it’s erasure” reflected frustration over what many saw as a colder, more generalized personality. Some complained that GPT-5 felt “emotionally distant” or less nuanced, even after adjusting custom instructions. Others reported sluggish responses, surprising factual errors, and more frequent hallucinations in certain contexts.

The discontent wasn’t just about accuracy; it was about feel. GPT-4o, for all its flaws, had built a rapport with some users. GPT-5’s reduced sycophancy and more businesslike tone, though intended to avoid reinforcing users’ biases, left some missing the warmth and familiarity of earlier models.

OpenAI’s Response

OpenAI CEO Sam Altman quickly addressed the concerns, acknowledging that a new routing system, designed to automatically switch between reasoning and non-reasoning modes, had malfunctioned, making GPT-5 seem “way dumber” than it was.

In response, OpenAI:

- Restored GPT-4o as a selectable model for Plus users.

- Doubled GPT-5 rate limits for paid tiers.

- Introduced manual controls for Auto, Fast, Thinking, and Thinking Mini modes (BleepingComputer).

- Improved transparency on when “thinking mode” is active.

These changes not only gave users back their preferred model but also put more power in their hands to choose the balance between speed and depth.

Improvements Since Launch

According to OpenAI’s system card and internal evaluations, together with the updates shipped after launch, GPT-5 improves on earlier models in several areas:

- Factual Accuracy: With web search enabled, GPT-5’s responses are ~45% less likely to contain a factual error than GPT-4o’s, and in “thinking” mode they are ~80% less likely to contain one than those of OpenAI’s o3 model.

- Reduced Sycophancy: Targeted training cut overly agreeable responses by more than half, helping avoid bias reinforcement.

- Expanded Model Picker: Users can now explicitly select depth and speed, with “Pro” mode offering research-grade reasoning for complex queries.

- Enhanced Customization: New preset personalities (Cynic, Robot, Listener, Nerd) leverage GPT-5’s improved steerability.

- Broader Benchmark Gains: GPT-5 outperforms prior models on visual reasoning, graduate-level problem solving, and coding benchmarks such as SWE-bench Verified.

As TechRadar noted, the GPT-5 Pro variant, previously limited to Pro subscribers, may soon expand to Plus accounts, albeit with usage constraints.

The Bigger Picture

The GPT-5 rollout illustrates the tension between capability upgrades and user expectations. While OpenAI made measurable technical strides (better reasoning, fewer hallucinations, stronger multimodal performance), the human side of AI adoption is just as critical. Personality shifts, interaction style, and emotional tone can feel as important to end users as raw intelligence.

OpenAI seems to have taken that lesson to heart, restoring choice, improving transparency, and signaling a long-term push toward per-user model customization (Altman via X).

Read the full GPT-5 system card and technical benchmarks on OpenAI’s official announcement.
